Goroutines Demystified

- Author: Shripad Mhetre (GitHub: @ShripadMhetre)

I recently started working with Go (Golang), and it had me hooked immediately. Coming from a Java and JavaScript background, and knowing the strengths and weaknesses of those languages, I find that Go genuinely tries to improve on some of their downsides. To name a few of its strengths: fast execution (Go compiles to native machine code), binaries that start up quickly, a solid standard library, a distinctive approach to error handling, and pointers combined with garbage collection.

But what really makes Go stand out from the others is its concurrency support, all thanks to goroutines!

Before getting into goroutines and the Go way of managing concurrency and multithreading, let's get some basics clear.

Concurrency vs Parallelism

Concurrency is a way of dealing with multiple tasks over the same period of time by interleaving them and making efficient use of the available resources.

Parallelism is about executing multiple tasks simultaneously, at the very same instant.

So is concurrency the same as parallelism?

A big NO. Concurrency doesn't necessarily mean that multiple tasks run at the same instant. A CPU has either a single core or multiple cores. On a single-core CPU, each task is given a time slice on the processor and is then replaced by another task. Because the switching happens so quickly, we as humans don't notice whether the tasks overlapped or ran one after the other.

On a multicore CPU, parallelism comes into the picture: the CPU can run multiple tasks at the same time on different cores.

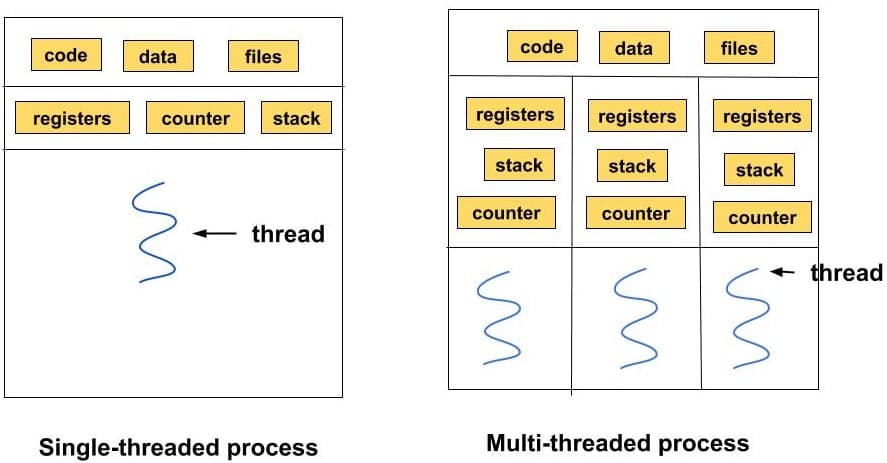
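To make the distinction concrete, here is a minimal sketch. The helper name `runTasks` is made up for this illustration. It uses `runtime.GOMAXPROCS(1)` so that only one OS thread executes Go code at a time: the tasks are still concurrent and all of them finish, but they can never run in parallel.

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
)

// runTasks starts n goroutines and returns how many of them completed.
// runTasks is a hypothetical helper for this illustration.
func runTasks(n int) int {
	var (
		wg   sync.WaitGroup
		mu   sync.Mutex
		done int
	)
	wg.Add(n)
	for i := 0; i < n; i++ {
		go func() {
			defer wg.Done()
			mu.Lock()
			done++
			mu.Unlock()
		}()
	}
	wg.Wait()
	return done
}

func main() {
	// One thread for Go code: tasks interleave but never overlap.
	prev := runtime.GOMAXPROCS(1)
	fmt.Println("concurrent only:", runTasks(4))

	// Restore the earlier setting: on a multicore machine the same
	// tasks may now also run in parallel.
	runtime.GOMAXPROCS(prev)
	fmt.Println("possibly parallel:", runTasks(4))
}
```

Both calls report 4 completed tasks. The program's structure (concurrency) is identical in both cases; only the hardware it is allowed to use (parallelism) changes.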

What is a thread?

A thread of execution is the smallest sequence of programmed instructions that can be managed independently by a scheduler. (i.e. it's an execution context, which is all the information a CPU needs to execute a stream of instructions.)

Now let's get to the actual topic of this post.

Goroutines: The Golang way of handling Concurrency

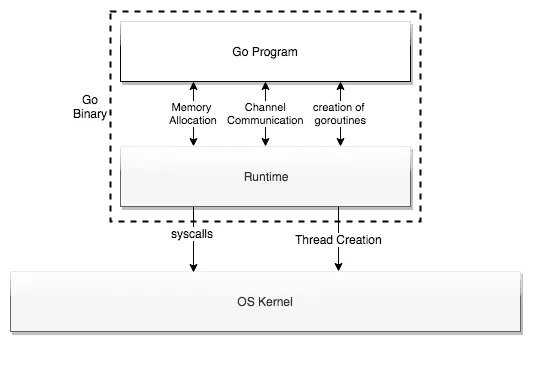

Goroutines are lightweight threads of execution managed by the Go runtime rather than by the operating system; the runtime schedules them onto OS (native) threads. They are very cheap and let us write concurrent programs that, on multicore hardware, can finish some tasks far more quickly than a sequential version would.

Goroutines are multiplexed onto a small number of OS threads, which typically means that concurrent Go programs need fewer resources to deliver the same level of performance as thread-per-task programs in languages such as Java. A thousand goroutines can run on just a handful of OS threads (roughly one per CPU core executing Go code, plus any threads blocked in system calls), whereas a thousand traditional Java threads are a thousand OS threads, each reserving its own stack, commonly around 1 MB by default. Note that this is stack memory rather than heap space, and the size is configurable.

Goroutines are far smaller than threads: a goroutine starts with a stack of around 2 kB, which grows as needed, whereas an OS thread typically reserves 1 MB or more of stack by default, depending on the platform.

Because many goroutines are mapped onto each thread, switching between goroutines happens in user space inside the Go runtime and usually avoids the cost of a kernel-level context switch.
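As a rough illustration of how cheap goroutines are, the sketch below parks a large number of them on a channel and estimates the stack memory in use per goroutine. The helper `parkGoroutines` is hypothetical, and the per-goroutine figure is only an approximation derived from `runtime.MemStats.StackInuse`; the exact number varies with the Go version and platform.

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
)

// parkGoroutines starts n goroutines that block on a channel. It returns
// how many extra goroutines exist while they are parked, plus a rough
// estimate of the stack memory each one uses.
// parkGoroutines is a hypothetical helper for this illustration.
func parkGoroutines(n int) (parked int, stackBytesEach int64) {
	var before, after runtime.MemStats
	var ready, done sync.WaitGroup
	release := make(chan struct{})

	runtime.ReadMemStats(&before)
	base := runtime.NumGoroutine()

	ready.Add(n)
	done.Add(n)
	for i := 0; i < n; i++ {
		go func() {
			defer done.Done()
			ready.Done() // signal that this goroutine has started
			<-release    // then park until the channel is closed
		}()
	}

	ready.Wait() // every goroutine is alive at this point
	parked = runtime.NumGoroutine() - base
	runtime.ReadMemStats(&after)

	close(release) // wake all goroutines so they can exit
	done.Wait()

	stackBytesEach = (int64(after.StackInuse) - int64(before.StackInuse)) / int64(n)
	return parked, stackBytesEach
}

func main() {
	parked, perGoroutine := parkGoroutines(100000)
	fmt.Println("goroutines parked:", parked)
	fmt.Println("approx. stack bytes per goroutine:", perGoroutine)
}
```

Running the equivalent experiment with one OS thread per task would reserve far more memory, which is the practical point of the comparison above.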

The Go FAQ puts it this way:

Goroutines are part of making concurrency easy to use. The idea, which has been around for a while, is to multiplex independently executing functions—coroutines—onto a set of threads. When a coroutine blocks, such as by calling a blocking system call, the run-time automatically moves other coroutines on the same operating system thread to a different, runnable thread so they won't be blocked. The programmer sees none of this, which is the point. The result, which we call goroutines, can be very cheap: they have little overhead beyond the memory for the stack, which is just a few kilobytes.

To make the stacks small, Go's run-time uses resizable, bounded stacks. A newly minted goroutine is given a few kilobytes, which is almost always enough. When it isn't, the run-time grows (and shrinks) the memory for storing the stack automatically, allowing many goroutines to live in a modest amount of memory. The CPU overhead averages about three cheap instructions per function call. It is practical to create hundreds of thousands of goroutines in the same address space. If goroutines were just threads, system resources would run out at a much smaller number.
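The growable stack described in the FAQ can be observed with a small sketch. The function names `depth` and `deepInGoroutine` are invented for this example. A goroutine recurses far deeper than a few kilobytes of stack could hold, and the runtime grows its stack transparently.

```go
package main

import "fmt"

// depth recurses n levels and returns how deep it went.
// Go does not eliminate this recursion, so every level needs a stack frame.
func depth(n int) int {
	if n == 0 {
		return 0
	}
	return 1 + depth(n-1)
}

// deepInGoroutine runs the recursion inside a fresh goroutine, which
// starts with a small stack, and hands the result back over a channel.
func deepInGoroutine(n int) int {
	result := make(chan int)
	go func() { result <- depth(n) }()
	return <-result
}

func main() {
	// 100,000 frames need far more than the initial stack;
	// the runtime grows it as the recursion proceeds.
	fmt.Println(deepInGoroutine(100000)) // prints 100000
}
```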

Go Runtime

The Go runtime has its own scheduler, which runs in user space on top of the kernel. The OS scheduler is preemptive. The Go scheduler was originally cooperative: another goroutine was scheduled only when the current one blocked, finished, or reached a scheduling point such as a function call. Those scheduling points are placed by the compiler and the runtime rather than by the developer, which is why the scheduler felt preemptive even then. Since Go 1.14 the runtime can also preempt goroutines asynchronously, so on most platforms even a tight loop with no function calls no longer starves other goroutines.
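Here is a minimal sketch of how scheduling looks from the program's side, assuming Go 1.14 or newer on a platform where the runtime can preempt a running goroutine. The helper name `callerGetsToRun` is made up. A goroutine spins in a loop that never blocks or yields, while only one thread is allowed to run Go code; the caller still gets scheduled again. On Go versions before 1.14 a program like this could hang.

```go
package main

import (
	"fmt"
	"runtime"
	"sync/atomic"
	"time"
)

// callerGetsToRun starts a goroutine that spins in a tight loop and
// returns true once the caller has been scheduled again and has stopped it.
// callerGetsToRun is a hypothetical helper for this illustration.
func callerGetsToRun() bool {
	// One thread for Go code: the spinner and the caller must share it.
	prev := runtime.GOMAXPROCS(1)
	defer runtime.GOMAXPROCS(prev)

	var stop int32
	finished := make(chan struct{})
	go func() {
		defer close(finished)
		for atomic.LoadInt32(&stop) == 0 {
			// busy loop: never blocks, never yields voluntarily
		}
	}()

	// While the caller sleeps, the spinner occupies the only thread.
	time.Sleep(20 * time.Millisecond)

	// Reached only because the runtime took the thread away from the spinner.
	atomic.StoreInt32(&stop, 1)
	<-finished
	return true
}

func main() {
	fmt.Println("caller scheduled again:", callerGetsToRun())
}
```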

Go uses the GOMAXPROCS setting (the GOMAXPROCS environment variable or runtime.GOMAXPROCS) to limit the number of operating system threads that can execute Go code simultaneously; threads blocked in system calls do not count against this limit. By default it typically matches the number of logical CPUs available.

Now let's look at a basic goroutine example, taken from Go by Example:

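    package main

    import (
    	"fmt"
    	"time"
    )

    // f prints its label once per loop iteration, three times in total.
    func f(from string) {
    	for i := 0; i < 3; i++ {
    		fmt.Println(from, ":", i)
    	}
    }

    func main() {
    	// Ordinary call: runs to completion before the next line.
    	f("Synchronous")

    	// The same function started as a goroutine.
    	go f("Goroutine")

    	// An anonymous function started as a goroutine.
    	go func(msg string) {
    		fmt.Println(msg)
    	}("Anonymous goroutine function called")

    	// Crude wait so the goroutines can finish before main exits.
    	time.Sleep(time.Second)
    	fmt.Println("done")
    }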

Output:

    $ go run main.go
    Synchronous : 0
    Synchronous : 1
    Synchronous : 2
    Goroutine : 0
    Goroutine : 1
    Anonymous goroutine function called
    Goroutine : 2
    done

Note:

- In this example, after the synchronous call f("Synchronous"), the order of the goroutine output can differ between runs. It depends on the Go runtime and its scheduler.

- time.Sleep is not a good way to wait for goroutines. Check out sync.WaitGroup for proper synchronization instead.
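As a sketch of that suggestion, the example can be restructured around `sync.WaitGroup`. The wrapper function `run` and its counter are additions made for this illustration and are not part of the Go by Example code. Instead of sleeping for a fixed second, `wg.Wait()` returns as soon as both goroutines have called `wg.Done()`.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// f prints its label once per loop iteration, three times in total.
func f(from string) {
	for i := 0; i < 3; i++ {
		fmt.Println(from, ":", i)
	}
}

// run starts the two goroutines from the example, waits for both with a
// WaitGroup, and returns how many of them finished.
// run is a hypothetical wrapper for this illustration.
func run() int32 {
	var wg sync.WaitGroup
	var finished int32

	wg.Add(2) // two goroutines to wait for

	go func() {
		defer wg.Done()
		f("Goroutine")
		atomic.AddInt32(&finished, 1)
	}()

	go func(msg string) {
		defer wg.Done()
		fmt.Println(msg)
		atomic.AddInt32(&finished, 1)
	}("Anonymous goroutine function called")

	wg.Wait() // blocks until both goroutines have called Done
	return atomic.LoadInt32(&finished)
}

func main() {
	f("Synchronous")
	fmt.Println("goroutines finished:", run())
	fmt.Println("done")
}
```

The interleaving of the goroutine output can still vary between runs, but "done" is now guaranteed to be printed only after both goroutines have finished.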

So how do we communicate between Goroutines?

The answer is Go channels. If you're interested, you can get a basic hands-on understanding through this Tour of Concurrency in Go.
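For a first taste, here is a minimal sketch of two goroutines communicating over a channel. The function name `sumSquares` is made up for this example. A producer goroutine sends values into an unbuffered channel, and the caller receives them until the channel is closed.

```go
package main

import "fmt"

// sumSquares has a producer goroutine send the squares of 1..n over a
// channel, and returns the sum of everything the caller received.
func sumSquares(n int) int {
	ch := make(chan int) // unbuffered: each send waits for a receive

	go func() {
		defer close(ch) // closing the channel ends the range loop below
		for i := 1; i <= n; i++ {
			ch <- i * i
		}
	}()

	sum := 0
	for v := range ch {
		sum += v
	}
	return sum
}

func main() {
	fmt.Println(sumSquares(4)) // 1 + 4 + 9 + 16 = 30
}
```

No sleep or WaitGroup is needed here, because receiving from the channel is itself the synchronization between the two goroutines.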

And that's a wrap. Thanks for sticking till the end!

Happy Coding